- Could the Living Algorithm possess Evolutionary Potentials?

- Living Algorithm's Predictive Power applied to the External Environment

- Predictive Cloud: Computational Backdrop for Expectation-based Emotions?

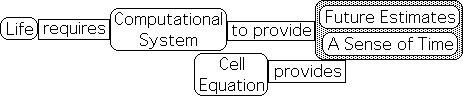

- Could Living Algorithm provide Life with a Sense of Time?

- How Living Algorithm mathematics could supply Temporal Sense

- Living Algorithm's Data Stream Acceleration as a Noise Filter

- Living Algorithm System has the streamlined operations that Evolution prefers.

- Preliminary Comparisons: the Living Algorithm vs. Probability, Physics & Electronics

- Summary

Could the Living Algorithm possess Evolutionary Potentials?

Living Algorithm?

A special equation whose sole function is digesting data streams.

The Living Algorithm's digestive process provides the rates of change (derivatives) of any data stream. These metrics/measures contain meaningful information that living systems could easily employ to fulfill potentials, such as survival.
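This section describes the Living Algorithm only in words. As a minimal sketch, we assume the following update rule: new Living Average = old Living Average + (new data point − old Living Average) / D, where D is the Decay Factor discussed later in this article. That rule is our assumption, not a formula quoted from this text, and the function names `living_average` and `digest` are hypothetical. Under this assumption the calculation is a form of exponentially weighted moving average with weight 1/D.

```python
def living_average(previous: float, new_point: float, decay: float) -> float:
    """One step of the assumed Living Algorithm update:
    new average = previous average + (new data point - previous average) / D.
    """
    if decay < 1:
        raise ValueError("the Decay Factor D must be at least 1")
    return previous + (new_point - previous) / decay


def digest(stream, decay: float, start: float = 0.0) -> list:
    """Digest a data stream point by point, returning the running Living Average."""
    averages = []
    current = start
    for point in stream:
        current = living_average(current, point, decay)
        averages.append(current)
    return averages


# A stream of ones digested with D = 10 climbs gradually toward 1.
print([round(x, 3) for x in digest([1, 1, 1, 1], decay=10)])  # [0.1, 0.19, 0.271, 0.344]
```

With D = 1 the output simply reproduces the raw stream; larger values of D blend each new point with the digested past.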

Living Algorithm System?

A mathematical system based in the Living Algorithm's method of digesting data streams.

In the prior monograph, the Triple Pulse of Attention, we saw that the mathematical behavior of the Living Algorithm System exhibited patterns of correspondence with many aspects of human behavior. Specifically, the Living Algorithm's Triple Pulse paralleled many sleep-related phenomena. This intriguing synergy between math and scientific 'fact' led us to ask the Why question. Why does the linkage exist? Is there a conceptual model that could help explain this math/data synergy?

* Many of these diagrams substitute the term 'Cell Equation' for 'Living Algorithm'. Why? When I created the diagrams, 'Cell Equation' was the name I used for the algorithm. Pardon the inconvenience.

Could the Living Algorithm be Life's Computational Tool?

Life and the Living Algorithm are compatible in many ways. As such, the Living Algorithm is an ideal type of equation for modeling living systems. Further, the Living Algorithm has many features that are useful to Life. Taking this line of reasoning a step further, we ask: could it be that the Living Algorithm doesn't just model Life, but that living systems actually employ the Living Algorithm to digest sensory data streams? In other words, could the Living Algorithm be Life's computational tool?


Life needs predictive descriptors concerning future moments.

Is there any evidence that Life employs the Living Algorithm to digest data streams?

The initial article in this monograph developed the notion that living systems require a Data Stream Mathematics that provides ongoing, up-to-date descriptions of a flow of environmental information. Life needs these descriptors to approximate the future. These approximations enable living systems to make the necessary adjustments to maximize the chances of fulfilling potentials, including survival. This ability to approximate the future applies to a wide range of behaviors – everything from the regulation of hormonal secretions to the ability to capture prey or escape from predators.

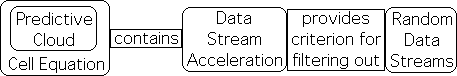

Predictive Cloud qualifies the Living Algorithm for position as Life's Operating System.

The prior article, The Living Algorithm System, argued that the Living Algorithm's Predictive Cloud provides viable estimates about future performance. As mentioned, Life requires a mathematical system that provides these future estimates. In this way, the Living Algorithm System fulfills this particular requirement for a mathematics of living systems. The existence of these talents provides preliminary support for the notion that the Living Algorithm could be the method by which living systems digest data streams.

Could the Living Algorithm provide the computational backdrop for evolutionary processes?

If the Living Algorithm is really one of the ways in which living systems digest data streams, could the Living Algorithm have evolutionary potentials as well? Why else would this computational ability be passed on from generation to generation? If it is indeed a computational tool of living systems, the Living Algorithm should also provide an essential mathematical backdrop that is crucial for the evo-emergence of many of Life's complex features.

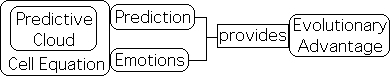

Could the Living Algorithm's Predictive Cloud provide an evolutionary advantage?

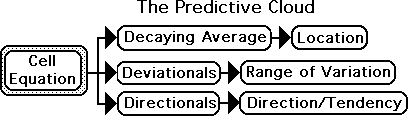

The Living Algorithm's Predictive Cloud is the collection of derivatives (rates of change) that surround each data point - each moment. This feature is of particular significance because the Predictive Cloud consists of predictive descriptors. In the prior article, we saw that these predictive descriptors could be very useful to Life on a moment-to-moment level. Could these predictions concerning environmental behavior confer an evolutionary advantage as well? Is it possible that knowledge of the Living Algorithm’s Predictive Cloud could further the chances of survival for the myriad biological forms? Does the Predictive Cloud provide an indication of the evolutionary potentials of the Living Algorithm System?

We don't know. You must read on to find out.

Living Algorithm's Predictive Descriptors applied to the Environment

Why might the Living Algorithm’s predictive descriptors provide an evolutionary advantage?

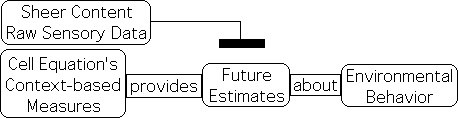

The Sheer Content Approach (raw data plus memory) provides no Predictive Ability.

The Living Algorithm’s predictive power is based upon the ongoing contextual features of the data stream. The content-based approach (the raw data combined with memory) is certainly the simplest system. It requires no storage memory and has no computational requirements. However, because the information in each isolated instant is devoid of context, employing the most recent instant to make predictions about the future is like shooting in the dark. The likelihood of a hit is so small as to be negligible.
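To make the contrast between content and context concrete, here is a hedged illustration under stated assumptions: a hypothetical environment that holds steady at 5.0 plus Gaussian noise, the assumed update rule avg += (x − avg) / D, and D = 10. The 'content' predictor guesses that the next point equals the latest raw point; the 'context' predictor guesses the Living Average. Mean squared error measures how far off each guess lands.

```python
import random


def compare_predictors(n: int = 5000, decay: float = 10.0, seed: int = 1):
    """Compare two one-step-ahead predictors on a noisy but stable signal.

    Content-based: predict that the next point equals the latest raw point.
    Context-based: predict that the next point equals the Living Average
    (assumed update: avg += (x - avg) / D).
    Returns the mean squared error of each predictor.
    """
    rng = random.Random(seed)
    # Hypothetical environment: a steady level of 5.0 plus Gaussian noise.
    stream = [5.0 + rng.gauss(0.0, 1.0) for _ in range(n)]

    avg = stream[0]
    err_content = 0.0
    err_context = 0.0
    for prev, nxt in zip(stream, stream[1:]):
        avg += (prev - avg) / decay        # digest the latest point
        err_content += (nxt - prev) ** 2   # raw-content guess
        err_context += (nxt - avg) ** 2    # Living Average guess
    count = n - 1
    return err_content / count, err_context / count


content_mse, context_mse = compare_predictors()
print(f"content-based MSE: {content_mse:.2f}, context-based MSE: {context_mse:.2f}")
```

For independent noise of variance σ², theory puts the content-based error near 2σ² and the context-based error near σ²(1 + 1/(2D − 1)), so the Living Average should roughly halve the error here. On a signal that trends rapidly the comparison can reverse, because the average lags behind; the example illustrates the idea rather than proving a general rule.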

Living Algorithm's context-based measures provide future estimates.

The Living Algorithm’s digestion process provides crucial knowledge about the features of any data stream. This digestion process generates ongoing, up-to-date rates of change (derivatives) that provide context. These contextual features provide predictive powers that are far superior to those provided by sheer content alone (the raw data combined with memory).

Living Algorithm’s Predictive Cloud provides information about location, range and tendency of the next data point.

The most basic of these features is the trio of central measures referred to as the Predictive Cloud. The Cloud's predictive power has many uses. On the most basic level, the Cloud provides information as to the probable location, range and direction of a prey or predator. The Living Average indicates the most probable location for the next piece of data; the Deviation, the range of variation; and the Directional, the tendency and probable direction of the data stream. This trio of central measures provides considerable predictive power regarding the next data point.
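The section names the trio but does not give their formulas. The sketch below assumes one plausible reading, which is our own: the Deviation is the Living Average of the size of each new point's departure from the prior Living Average, and the Directional is the Living Average of that departure with its sign kept. Both definitions, the class `PredictiveCloud`, and its `forecast` method are hypothetical; the dot/circle/arrow labels follow the predator/prey diagram described in this article.

```python
from dataclasses import dataclass


@dataclass
class PredictiveCloud:
    """Hypothetical sketch of the Predictive Cloud's trio of measures.

    Assumed definitions (not stated in this section), with change = x - prior average:
      average     += change / D                      -> probable location
      deviation   += (|change| - deviation) / D      -> range of variation
      directional += (change - directional) / D      -> tendency / direction
    """
    decay: float = 10.0
    average: float = 0.0
    deviation: float = 0.0
    directional: float = 0.0

    def update(self, x: float) -> None:
        change = x - self.average  # departure from the prior Living Average
        self.average += change / self.decay
        self.deviation += (abs(change) - self.deviation) / self.decay
        self.directional += (change - self.directional) / self.decay

    def forecast(self):
        """Return (dot, circle, arrow): probable location, range, direction."""
        dot = self.average
        circle = (self.average - self.deviation, self.average + self.deviation)
        if self.directional > 0:
            arrow = "rising"
        elif self.directional < 0:
            arrow = "falling"
        else:
            arrow = "steady"
        return dot, circle, arrow


cloud = PredictiveCloud(decay=10.0)
for position in range(30):  # hypothetical prey moving steadily forward
    cloud.update(position)
dot, circle, arrow = cloud.forecast()
print(f"dot={dot:.2f}, circle=({circle[0]:.2f}, {circle[1]:.2f}), arrow={arrow}")
```

For a target moving steadily forward, the dot lags behind the latest position while the arrow reports the direction of travel; the circle widens or narrows with the size of recent departures.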

Could Knowledge of Data Stream’s Features provide an evolutionary advantage?

Could knowledge of the Predictive Cloud's metrics/measures regarding the ongoing flow of environmental data provide an essential evolutionary advantage? Could an organism, whether cell or human being, make more accurate predictions about the future with an understanding of these ongoing mathematical features of the myriad environmental data streams?

Examples of the Evolutionary Power of the Predictive Cloud's Trio of Measures: Predator/Prey

Let’s explore some examples of the predictive power of the trio of measures that constitute the Predictive Cloud. An ongoing knowledge of this trio of central measures would provide invaluable information to the prey in terms of the probable range, direction, acceleration, and actual location of a moving predator. Conversely, these measures would provide invaluable information to the predator in terms of the probable location of an escaping prey. (In the diagram, the dot indicates the probable location of the next data point, the circle its range, and the arrow its direction.)

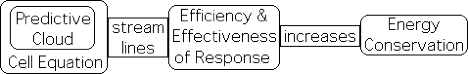

Knowledge of Probable Outcome increases Effectiveness & Efficiency of Response

An organism could make a more efficient and effective response to environmental input with a knowledge of probable outcome. For example, the ability to better predict location, range and direction of motion would allow the predator/prey to capture/escape more frequently. It seems safe to say that the better the organism's predictions are, the greater the chance of survival. This would apply to any organism. In short, the knowledge of the ongoing contextual features of any data stream enables any organism to make conscious, subconscious or hard-wired choices that further the chances of survival.

Cloud's Predictive Capacity could also lead to Energy Conservation

The knowledge of probable outcome supplied by the Predictive Cloud also enables the organism to conserve energy. Instead of wasting energy in the unguided attempt to procure food or sexual partners, the organism would only expend valuable energy when the Predictive Cloud indicates a greater chance of success. Of course, energy conservation is a key evolutionary talent.

Predator/prey arms race includes computational ability

In addition to physical capabilities such as size and strength, it seems evident that the predator/prey evolutionary arms race would have to include the computational ability to make probabilistic predictions about the future. Further, the refinement of this essentially mathematical skill has no end. While strength and size have limits imposed by physical requirements, neural development is virtually unlimited, as witnessed by these words. The continuous refinement of this computing advantage, whether through experience, evolution, or emergence, would enable the organism to maximize the impact of the response while minimizing energy expenditure, an essential evolutionary ability. Of course, this refinement of computational abilities could apply to any of the Living Algorithm's multitude of potentials.


Predictive Cloud: Computational Backdrop for Expectation-based Emotions?

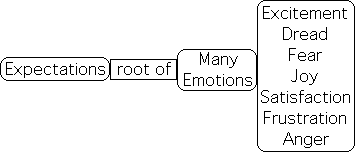

The Predictive Cloud generates Expectations.

As mentioned, an ongoing knowledge of the Living Algorithm’s Predictive Cloud, the aforementioned trio of measures, could be employed as an invaluable predictive tool. On more complex levels, these same measures could easily supply the essential computational backdrop for the development of our emotions. Let us offer some cursory remarks in this regard. The Cloud provides information that could enable an organism to anticipate and prepare for the future. Anticipation morphs into expectation.


Expectations charged with Emotion.


Emotions have evolutionary purpose – Reinforce Memory.

In brief, the Cloud’s measures of data stream change are emotionally charged because they determine expectations concerning the future. The investment of emotion into information, whether memories or data, has an evolutionary purpose: to reinforce memory.


Emotionally charged memories are easier to recall.

This emotional content renders the information easier to remember. This is not mere speculation. Cognitive studies have shown that memory and emotions are linked. Meaningful information is invested with emotion because it is relevant to our existence. By contrast, a random set of numbers has no such relevance, carries no emotional charge, and is therefore difficult to remember.

Life’s best interests to be aware of Living Algorithm’s Predictive Cloud

It seems evident that the Living Algorithm’s Predictive Cloud could provide a significant evolutionary advantage to living systems. The accuracy of future estimates is increased via the application of a simple and replicable algorithm. The Cloud's estimates of future performance could be employed to predict environmental behavior. Better predictions increase the efficiency and effectiveness of our energy usage. This conservation of energy certainly provides an evolutionary advantage. Further, the Cloud's trio of predictors could easily generate the expectations that are the basis of many emotions. Emotions have an evolutionary purpose, as they are associated with heightened retention and recall of memories.

Life must employ Living Algorithm to obtain Cloud's predictive power.

This discussion suggests that it is in Life's best evolutionary interests to have knowledge of the three ongoing, up-to-date measures that the Living Algorithm provides. But to have access to this predictive power, Life must employ the Living Algorithm to digest data streams.

Could the Living Algorithm provide Life with a Sense of Time?

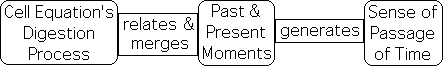

Living Algorithm's process, relating past to present, supplies a sense of passage of time.

Besides predictive power, the Living Algorithm's method of digesting information also supplies a sense of the passage of time. The Living Algorithm's repetitive/iterative process relates past data to current data, with the present weighted most heavily. This relating process, which merges the past with the present, confers a sense of the passage of time. Similarly, an animated cartoon conveys motion through a series of related images.
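The claim that the present is weighted most heavily can be checked with a short derivation, assuming the update rule X_N = X_{N-1} + (x_N − X_{N-1})/D, where x_N is the Nth data point, X_N the Living Average after it, and D the Decay Factor (this notation is ours, not the article's). Unrolling the recursion from X_0 = 0 gives each past point a weight that shrinks geometrically with its age k:

```latex
X_N = X_{N-1} + \frac{x_N - X_{N-1}}{D}
    = \frac{1}{D}\,x_N + \left(1 - \frac{1}{D}\right) X_{N-1}

% Unrolling the recursion from X_0 = 0:
X_N = \sum_{k=0}^{N-1} \frac{1}{D}\left(1 - \frac{1}{D}\right)^{k} x_{N-k}
```

With D = 10, the current point carries weight 0.1, the point one step back 0.09, and the point two steps back 0.081. With D = 1, every weight for k ≥ 1 is zero, so only the present point survives.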

The flow of digested sensory information only makes sense over time.

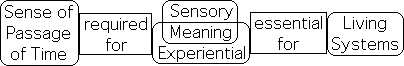

The sense of time passing is important to living systems for multiple reasons. A primary reason is that the flow of digested sensory information only makes sense over time. Time duration is required to derive meaning from individual sensations. Isolated sensory input makes no sense by itself. For instance, an isolated sound without temporal context is neither music nor a word. Even a picture takes time to digest, no matter how brief. A sustained image over time is required to identify objects. The sense of smell, supposedly the first sense, requires a duration of some kind to differentiate the potentially random noise of a single scent from the organized meaning of a sustained fragrance.

Organisms require a sense of time to differentiate random noise from an organized signal.

A sense of time is required to experience the meaning of a signal. If an organism existed in the state of sheer immediacy, it would automatically respond to environmental stimuli – tit-for-tat – just as matter does. But to make any kind of sense out of a sensory message, the organism requires an elemental sense of the passage of time. The organism must be able to pay attention to the sensory translation for a sufficient length of time to determine if the message indicates food, foe, or sexual partner. Otherwise the raw sensory information is just random garble. It is evident that the ability to sense the passage of time is essential if we are to experience the information behind sensory input.

Sense of Time, not just evolutionary talent, but a requisite talent of Living Systems

Further, when an organism must make choices based upon sensory input to maximize the chances of survival, a sense of time is required to even begin comparing alternatives. It seems that a sense of time is not just an evolutionary talent, but a requisite talent for all living systems. For an organism to both have a sense of time and make educated guesses about the future, it seems that living systems must have emerged with some sort of computational talent. This computational talent could be employed to digest the sensory data streams that are in turn derived from the continuous flow of environmental information. The Living Algorithm's method of digesting data streams provides both future estimates and a sense of time.

Is it possible that the Living Algorithm & Life emerged together?

If the ability to digest information and transform it into a meaningful form is indeed an essential characteristic feature of living systems, could the Living Algorithm and Life have emerged from the primordial slime together?


How Living Algorithm mathematics could supply Life with a Temporal Sense

How does the Living Algorithm provide a sense of time?

The Living Algorithm's digestion process generates a sense of time by merging and relating the present moment with past moments.

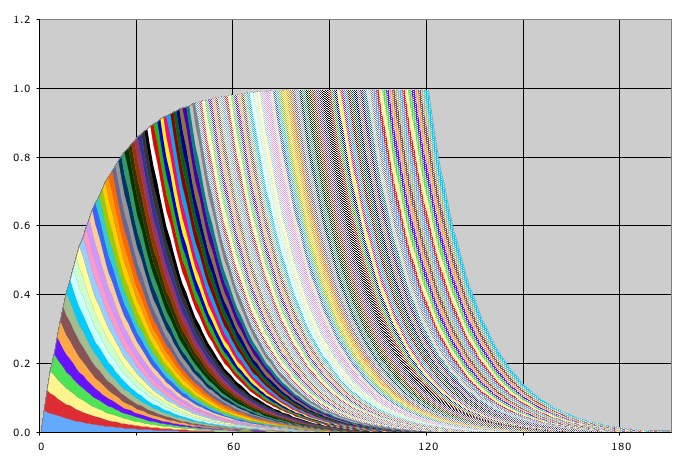

Data's impact decays over time.

How is this blending of past and present accomplished?

The impact of each data point decays over time. This process is illustrated in the graph at right. Each color swatch represents the impact of an individual data point as it decays over time. The x-axis represents 180 repetitions of the Living Algorithm's mathematical process. Notice how each moment includes many colors. This shows that each moment carries the impact of both prior and current data points.
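The decaying swatches in the graph can be imitated numerically. This is a sketch under the assumed update rule avg += (x − avg) / D, with a hypothetical helper `impact_of_single_point`; it tracks the weight that one data point retains in the Living Average over 180 repetitions, matching the span of the graph's x-axis.

```python
def impact_of_single_point(decay: float, steps: int = 180) -> list:
    """Trace how much one data point still contributes to the Living Average.

    Assumes the update avg += (x - avg) / D. A point enters with weight 1/D
    and keeps (1 - 1/D) of its remaining influence at each later step.
    """
    weights = []
    weight = 1.0 / decay
    for _ in range(steps):
        weights.append(weight)
        weight *= 1.0 - 1.0 / decay
    return weights


trace = impact_of_single_point(decay=10.0)
print([round(w, 4) for w in trace[:5]])  # [0.1, 0.09, 0.081, 0.0729, 0.0656]
```

Each step keeps (1 − 1/D) of the point's remaining influence, so by the 180th repetition with D = 10 its weight is vanishingly small; summed over all repetitions, the weights approach 1.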

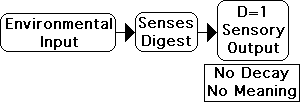

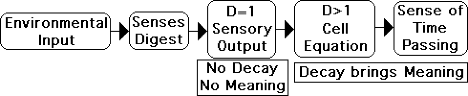

For Raw Sensory Output, the Decay Factor = 1: no time, hence no meaning.

How is decay incorporated into the mathematical process?

The Living Algorithm's Decay Factor supplies this function. Let's see how.

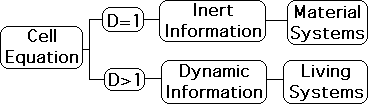

The senses digest continuous environmental input to transform it into digital form. However, this digital form has no meaning. Each piece of information is isolated from the rest. At this point in the digestion process, the Decay Factor = 1, which means that there is no decay. With no decay, there is no relationship between the data points. With no relationship, there is no sense of time. Without a duration of time, the sensory output (the translated environmental information) makes no sense. In summary, when the Decay Factor is one (D=1), there is no time, hence no meaning.

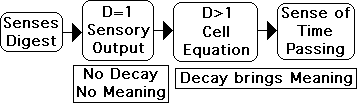

Living Algorithm digests sensory input. The Decay Factor > 1: Time, hence potential meaning

To provide a sense of time, hence meaning, the sensory data streams require another level of digestion. The senses transform environmental information into sensory data streams. The Living Algorithm's digestion process relates the isolated points in the sensory data stream to create a sense of time. This relating process automatically occurs when the Decay Factor is greater than 1 (D>1). The Living Algorithm's digestion process generates a sense of time passing, which simultaneously imparts the potential for meaning.
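The contrast between D = 1 and D > 1 can be seen on a toy stream containing a single isolated 'blip'. This sketch again assumes the update rule avg += (x − avg) / D; the stream and the `digest` helper are hypothetical.

```python
def digest(stream, decay: float, start: float = 0.0) -> list:
    """Living Average of a stream under the assumed update avg += (x - avg) / D."""
    out, avg = [], start
    for x in stream:
        avg += (x - avg) / decay
        out.append(avg)
    return out


raw = [0, 0, 1, 0, 0]  # an isolated sensory 'blip'

print(digest(raw, decay=1))  # D = 1: [0.0, 0.0, 1.0, 0.0, 0.0], just the raw points
print(digest(raw, decay=2))  # D = 2: [0.0, 0.0, 0.5, 0.25, 0.125], the blip lingers
```

With D = 1 the blip appears and vanishes in a single instant, unrelated to its neighbors. With D = 2 it lingers and fades, so each output carries traces of earlier points.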

Living Algorithm provides Meaningless Sensory Input with Meaning via Time.

To aid retention, let us summarize this important process. Our senses digest continuous environmental input, transforming it into sensory data streams. The isolated instants of these sensory data streams don't have any inherent meaning, because they are not related to each other in any way, as there is no decay (D=1). The Living Algorithm digests sensory data streams. This digestion process relates the isolated instants by introducing decay (D>1), which generates a sense of time. A sense of time is an essential ingredient of meaning.

This analysis suggests that it is at least plausible that the Living Algorithm's digestion process could create the sense of time that is required for meaning. Because living systems must derive meaning from data streams, this lends further support to the notion that Life employs the Living Algorithm to digest data streams.

Material Systems: Inert Information (D=1); Living Systems: Dynamic Information (D>1)

As an aside, material systems do not derive meaning from data streams. As such, material systems only deal with information that is inert. A data stream's information is inert when the Living Algorithm's Decay Factor is one (D = 1). Conversely, a data stream's information is dynamic when the Decay Factor is greater than 1 (D>1). Living systems require dynamic information because it yields meaning. As such, this significant difference between material and living systems is inherent to the Living Algorithm's method of digesting data streams.

Living Algorithm's Data Stream Acceleration as a Noise Filter

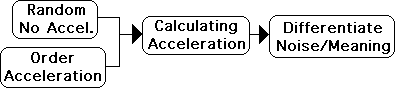

Living Algorithm computes Data Stream Acceleration.

The prior section illustrated how the Living Algorithm could very well provide the computational backdrop for our sense of time. A sense of time is required to derive meaning from sensory input and enable us to compare alternatives. Besides providing the sense of time that imparts meaning to our senses, the Living Algorithm also calculates a data stream's acceleration. In fact, two of the Predictive Cloud's measures are accelerations. Besides providing plausible estimates concerning future performance, knowledge of a data stream's acceleration could enable an organism to filter out random data streams as meaningless noise. Put another way, data stream acceleration enables an organism to differentiate random noise from a meaningful signal.

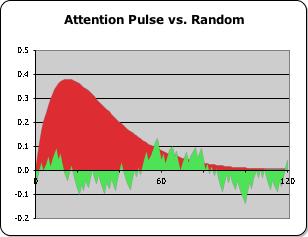

The Acceleration of Organized Data Streams is much greater than that of a Random Data Stream

The ability to differentiate a random from an organized signal is due to a simple mathematical fact. The random data streams associated with background noise possess an innate and stable velocity, but no acceleration. Conversely, an organized data stream (a string of relatively stable values consistent with ordered environmental input) has a distinct acceleration.

Graph: Random vs. Organized Acceleration

The graph at right exhibits this distinct difference between a random and an organized data stream. The big red curve represents the acceleration of an ordered data stream, the classic Pulse of Attention (120 ones). The erratic green curve represents the acceleration of a random stream of zeros and ones. It is immediately apparent that the acceleration of the organized data stream overshadows that of the random data stream, rising about 3 times higher.
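The comparison in the graph can be approximated in a few lines. This is a hedged sketch: it assumes that the plotted acceleration is the Living Average of each point's departure from the prior Living Average (an assumed definition of the Directional), a Decay Factor of 10, a starting average of 0, and a seeded random stream of zeros and ones. The function name `directional_series` is hypothetical, and the exact ratio between the two curves depends on these choices.

```python
import random


def directional_series(stream, decay: float = 10.0) -> list:
    """Hypothetical 'acceleration' trace: the Living Average of each point's
    departure from the prior Living Average (assumed definition)."""
    avg, directional, out = 0.0, 0.0, []
    for x in stream:
        change = x - avg                 # departure from the prior average
        avg += change / decay            # assumed Living Average update
        directional += (change - directional) / decay
        out.append(directional)
    return out


organized = directional_series([1] * 120)  # the '120 ones' stream
rng = random.Random(42)
noise = directional_series([rng.randint(0, 1) for _ in range(2000)])

peak = max(organized)
typical_noise = sum(abs(v) for v in noise) / len(noise)
print(f"pulse peak: {peak:.4f}, mean |noise directional|: {typical_noise:.4f}")
```

Under these assumptions the pulse for 120 ones peaks at 0.9⁹ ≈ 0.387 (around the ninth and tenth data points), while the random stream's trace hovers around zero; treat the printed noise figure as illustrative, since it varies with the seed.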

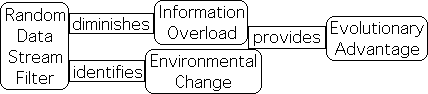

Minimizing Information Overload conserves Energy.

Why is identifying random streams a significant talent? After the senses translate continuous environmental information into sensory data streams, it is essential to first pare out superfluous data streams from consideration. Differentiating random from organized signals is the initial step in the process. This filtering process prevents information overload. The ability to identify and ignore random data streams eliminates an abundance of environmental information from consideration. Minimizing the number of data streams under consideration maximizes the speed and efficiency of response, which of course conserves energy.

Focus upon data stream acceleration enables an organism to identify environmental changes.

The focus upon data stream acceleration as a way of filtering out random signals has other advantages as well. Paying attention to data stream acceleration enables frogs to conserve their energy by only shooting their tongues at bugs, rather than plants. Insects move erratically, and hence with more data stream acceleration, than plants. On more complex levels, focusing upon data stream acceleration allows complex life forms to detect changes in their environment. Perceiving environmental changes, whether auditory, visual, olfactory or tactile, is essential for any organism that must detect an approaching predator, prey, or sexual partner. Organisms with this sense must somehow be able to perform calculations that differentiate the random noise of the background environment from the significant accelerations of predator and prey. The simple Living Algorithm supplies the ability to perform these calculations relatively effortlessly.

Random Data Stream Filter both diminishes Information Overload & identifies Environmental Change

It seems that the Living Algorithm-derived random data stream filter could be employed to diminish the amount of incoming data and hence prevent information overload. This same filter could also be employed to identify environmental changes. Both of these computational talents provide an evolutionary advantage.

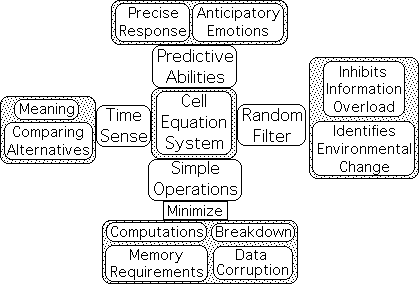

Living Algorithm System has the streamlined operations that Evolution prefers.

The Living Algorithm System provides predictive capabilities. Further, the Living Algorithm's method of merging/relating the past and the present generates a sense of the passage of time. Living systems require a sense of time to derive meaning from the sensory data streams. Finally, the Living Algorithm computes data stream acceleration. Knowledge of data stream acceleration could enable an organism to differentiate random from meaningful signals and identify changes in the environment. Each of these talents provides an evolutionary advantage.

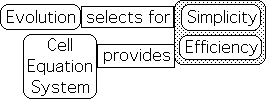

Evolution selects for Simplicity & Efficiency.

Besides providing these advantages, the Living Algorithm satisfies evolution's simplicity requirement. Evolutionary processes select for efficiency and simplicity in order to streamline operations and avoid the corruption of complexity.

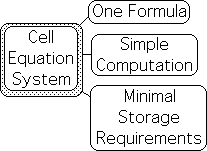

3 ways that the Living Algorithm System provides Simplicity and Efficiency

The Living Algorithm System satisfies these demands in three ways.

- 1) There is only one formula or algorithm. The Living Algorithm’s pattern of commands can be employed on multiple levels to calculate an endless array of predictive measures. In other words, a simple replication of one algorithm provides an abundant source of predictive data streams.

- 2) The necessary computations are quite simple – only employing the operations of basic math.

- 3) The Living Algorithm measures require very little storage space. Rather than storing exact memories of any kind, only the ongoing, digested measures are stored. The present is continually incorporated into the digested past, with the most recent environmental input having the greatest impact. Rather than a complete motion picture, only the most recent composite quantity is stored: discrete pieces of data rather than a continuous data flow. Movies and music require far more storage capacity than numbers and letters, as anyone knows who has tried to copy CDs and DVDs onto a personal computer. Even static pictures require far less storage space than movies. Due to the contextual nature of the information, storage and retrieval are nearly simultaneous.
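The three points above can be sketched as a constant-memory streaming object. This is a minimal, hypothetical illustration (the class `LivingStream` and its method names are ours), assuming the same update rule for all three measures: one function, new = old + (input − old) / D, is applied to the raw point, to its departure from the prior average, and to the size of that departure. The state is three numbers plus D, however long the stream runs.

```python
class LivingStream:
    """Constant-memory digestion of one data stream (hypothetical sketch).

    Only three running numbers are kept, however long the stream runs;
    no raw history is stored. All three are updated by the same assumed rule,
    new = old + (input - old) / D, applied to different inputs.
    """
    __slots__ = ("decay", "average", "deviation", "directional")

    def __init__(self, decay: float = 10.0):
        self.decay = decay
        self.average = 0.0
        self.deviation = 0.0
        self.directional = 0.0

    @staticmethod
    def _living(old: float, new: float, decay: float) -> float:
        # The one replicated algorithm: subtraction, division, addition.
        return old + (new - old) / decay

    def feed(self, x: float) -> None:
        change = x - self.average  # departure from the prior average
        self.average = self._living(self.average, x, self.decay)
        self.deviation = self._living(self.deviation, abs(change), self.decay)
        self.directional = self._living(self.directional, change, self.decay)


stream = LivingStream()
for x in range(100_000):
    stream.feed(x % 7)  # a long stream; the stored state does not grow
print(stream.average, stream.deviation, stream.directional)
```

Because `__slots__` prevents a per-instance dictionary and no history list is kept, the stored state stays fixed as the stream grows; each data point needs only basic arithmetic and an absolute value.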

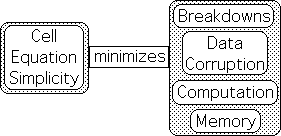

Simplicity minimizes Breakdown, Corruption, Computation, & Memory Requirements

Besides providing computational talents that are crucial for survival, and hence provide an evolutionary advantage, it seems that the Living Algorithm System also satisfies the requirement of simplicity. The principle of conservation dictates that Life requires a data digestion system whose features are as economical as possible. This simplicity minimizes breakdown, data corruption, and computational and memory requirements. The Living Algorithm itself is simple; its computations are basic; and its memory requirements are minimal.

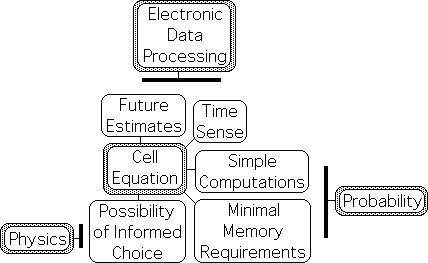

Preliminary Comparisons: the Living Algorithm vs. Probability, Physics & Electronics

We've seen many ways in which the Living Algorithm's Information Digestion System could provide essential evolutionary talents to living systems. How do other methods compare? Let us offer some preliminary remarks in this regard.

Probability’s Computational & Memory Requirements Prohibitive

As seen, the Living Algorithm satisfies the simplicity requirement for living systems in terms of computation and memory. In contrast, Probability has prohibitive computational and memory requirements. No economy whatsoever. This dooms Probability as a computational tool for living systems. To provide predictive descriptors, Probability requires many complicated formulas and operations, not to mention the necessity of a huge and precise database. Further, Probability only makes predictions about the general features of the set, not the ongoing moments. This method also weights past and present data points equally. While Probability’s descriptors provide estimates of the future, these estimates are based upon what was, rather than what is happening now. Besides being more economical in terms of operations and memory requirements, the Living Algorithm System provides more up-to-date predictions about the future than does Probability.
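A minimal sketch of the weighting difference, assuming that the 'Probability' side is represented by a plain arithmetic mean over the whole history (a simplification; probability theory offers many other estimators) and the Living Algorithm by the assumed update avg += (x − avg) / D with D = 10. The environment is hypothetical: quiet for 200 moments, then changed for the last 50.

```python
def arithmetic_mean(points) -> float:
    """Equal weighting of every point in the history."""
    return sum(points) / len(points)


def living_average(points, decay: float = 10.0, start: float = 0.0) -> float:
    """Recency-weighted digest under the assumed update avg += (x - avg) / D."""
    avg = start
    for x in points:
        avg += (x - avg) / decay
    return avg


# Hypothetical environment that was quiet for 200 moments, then changed.
history = [0.0] * 200 + [1.0] * 50
print(f"arithmetic mean: {arithmetic_mean(history):.3f}")  # 0.200
print(f"living average:  {living_average(history):.3f}")   # 0.995
```

The equally weighted mean still mostly reflects what was, while the Living Average reports what is happening now.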

Electronics provides no future estimates, nor any sense of time.

Electronics provides another system that is in the running for the position as Life’s computational tool. Electronic data processing is different in many fundamental ways from the type of data digestion provided by the Living Algorithm. The function of electronic data processing is to transmit environmental or internal input as accurately as possible through noise reduction. Shannon, the father of information theory, studied this type of processing regarding the clarity of electronic transmissions, such as radio, television, computers, and spacecraft. Accuracy is of utmost importance in electronic transmissions, as Internet users well know. In this case, the internal codes that are required to ensure accuracy only predict the most usual forms that the message could take, as a type of redundancy testing. This type of data processing requires standards of expectations to establish redundancy patterns. However, this method does not provide any predictive abilities (no estimates concerning future performance) and certainly no room to move. Further, electronic information processing does not relate the data. Without a relation between the past and the present, there is no sense of time passing. Hence, electronic information has no meaning. Electronics imparts accuracy; humans impart meaning. And the Living Algorithm provides the type of information digestion that imparts that meaning.

Physics doesn't incorporate possibility of Informed Choice.

Physics provides yet another alternative for determining living behavior. Given the initial conditions, say the Big Bang, and the appropriate equations, Physicists can precisely predict the behavior of material systems. If living systems have no ability to adjust to external circumstances, and instead respond automatically to environmental stimuli, then Physics is still in the running for the position as Life’s computational tool. If, however, living systems have the ability to make adjustments that facilitate survival, then they need a mechanism that will provide predictive powers and a sense of time. If this is the true state of things, then Physics is out of the running, as all operations are automatic. We will deal with these topics in more depth in the article on Informed Choice.

Summary

The Living Algorithm: an ideal evolutionary tool due to predictive capacity & ease of use.

The Living Algorithm is an ideal evolutionary tool due to its predictive capacity, minimal memory requirements and ease of use. The Living Algorithm’s Predictive Cloud easily characterizes the moment and provides pragmatic estimates about the immediate future. Articulating the relationship between moments reveals patterns. Moment-to-moment updating discloses changes in these same patterns. Both are crucial abilities for any organism. The Living Algorithm provides both of these functions. If living systems employ the Living Algorithm to digest environmental data streams, it seems reasonable to assume that this would impart a huge evolutionary advantage.

Living Algorithm's relating process provides a Sense of Time.

Besides this predictive capacity, the Living Algorithm's relating process, whereby past information is related to current information, provides living systems with a sense of time. A sense of time is a requisite talent for interpreting the sensory input from the environment. Without the ability to experience this sensory information over time, the environmental input becomes meaningless noise. Without access to meaningful information, an organism cannot respond effectively to environmental stimuli and perishes. Besides separating living matter from inert matter, a sense of time provides a serious evolutionary advantage.

Data Stream Acceleration provides a random filter.

Finally, the Living Algorithm's information digestion process also generates the acceleration of any data stream (one of the features of the Predictive Cloud). Data stream acceleration could easily provide the computations that enable an organism to differentiate a random from an organized signal. This talent diminishes the possibility of information overload. Eliminating superfluous information from consideration certainly provides an evolutionary advantage. As a significant side benefit, knowledge of data stream acceleration also enables an organism to identify environmental change. Identifying change could also signify the need for an appropriate response, another significant evolutionary advantage. It seems evident that if Life employed the Living Algorithm's digestion process, this process could provide a multitude of evolutionary advantages.

Link

To further illustrate the pragmatic nature of the Living Algorithm's Predictive Cloud, the next article in the stream explores a concrete example from the sport of baseball: the batting average. In the process, we will see how Probability and the Living Algorithm are Complementary Systems.